

Definition of west

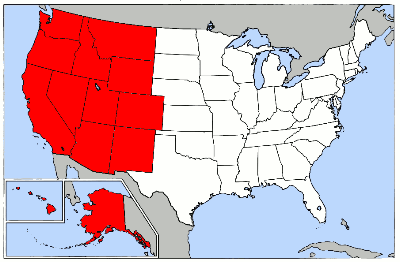

west - adj. situated in, facing, or moving toward the west; adv. to, toward, or in the west; noun the countries of (originally) Europe and (now including) North America and South America; the region of the United States lying to the west of the Mississippi River; English painter (born in America) who became the second president of the Royal Academy (1738-1820); United States film actress (1892-1980); British writer (born in Ireland) (1892-1983); the cardinal compass point that is at 270 degrees.
